Exercise: Basic model skill

import modelskill as ms

You want to do a simple comparison between model and observation using the `ms.match` function, but the following code snippet doesn’t work.

Change the code below so that it works as intended. Hint: look at the documentation.

help(ms.match)

fn_mod = '../data/SW/ts_storm_4.dfs0'

fn_obs = '../data/SW/eur_Hm0.dfs0'

c = ms.match(fn_obs, fn_mod)

/opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/obs.py:79: UserWarning: Could not guess geometry type from data or args, assuming POINT geometry. Use PointObservation or TrackObservation to be explicit.

warnings.warn(

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/matching.py:466, in _parse_single_model(mod, item, gtype)

465 try:

--> 466 return model_result(mod, item=item, gtype=gtype)

467 except ValueError as e:

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/model/factory.py:57, in model_result(data, aux_items, gtype, **kwargs)

55 geometry = GeometryType(gtype)

---> 57 return _modelresult_lookup[geometry](

58 data=data,

59 aux_items=aux_items,

60 **kwargs,

61 )

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/model/point.py:56, in PointModelResult.__init__(self, data, name, x, y, z, item, quantity, aux_items)

55 if not self._is_input_validated(data):

---> 56 data = _parse_point_input(

57 data,

58 name=name,

59 item=item,

60 quantity=quantity,

61 aux_items=aux_items,

62 x=x,

63 y=y,

64 z=z,

65 )

67 assert isinstance(data, xr.Dataset)

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/timeseries/_point.py:105, in _parse_point_input(data, name, item, quantity, x, y, z, aux_items)

104 valid_items = list(data.data_vars)

--> 105 sel_items = _parse_point_items(valid_items, item=item, aux_items=aux_items)

106 item_name = sel_items.values

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/timeseries/_point.py:37, in _parse_point_items(items, item, aux_items)

36 elif len(items) > 1:

---> 37 raise ValueError(

38 f"Input has more than 1 item, but item was not given! Available items: {items}"

39 )

41 item = _get_name(item, valid_names=items)

ValueError: Input has more than 1 item, but item was not given! Available items: ['Europlatform: Sign. Wave Height', 'K14: Sign. Wave Height', 'F16: Sign. Wave Height', 'F3: Sign. Wave Height', 'Europlatform: Sign. Wave Height, W', 'K14: Sign. Wave Height, W', 'F16: Sign. Wave Height, W', 'F3: Sign. Wave Height, W', 'Europlatform: Sign. Wave Height, S', 'K14: Sign. Wave Height, S', 'F16: Sign. Wave Height, S', 'F3: Sign. Wave Height, S', 'Europlatform: Wind speed', 'K14: Wind speed', 'F16: Wind speed', 'F3: Wind speed', 'Europlatform: Wind direction', 'K14: Wind direction', 'F16: Wind direction', 'F3: Wind direction']

During handling of the above exception, another exception occurred:

ValueError Traceback (most recent call last)

Cell In[2], line 4

1 fn_mod = '../data/SW/ts_storm_4.dfs0'

2 fn_obs = '../data/SW/eur_Hm0.dfs0'

----> 4 c = ms.match(fn_obs, fn_mod)

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/matching.py:256, in match(obs, mod, obs_item, mod_item, gtype, max_model_gap, spatial_method, spatial_tolerance, obs_no_overlap)

204 """Match observation and model result data in space and time

205

206 NOTE: In case of multiple model results with different time coverage,

(...) 253 from_matched - Create a Comparer from observation and model results that are already matched

254 """

255 if isinstance(obs, get_args(ObsInputType)):

--> 256 return _match_single_obs(

257 obs,

258 mod,

259 obs_item=obs_item,

260 mod_item=mod_item,

261 gtype=gtype,

262 max_model_gap=max_model_gap,

263 spatial_method=spatial_method,

264 spatial_tolerance=spatial_tolerance,

265 obs_no_overlap=obs_no_overlap,

266 )

268 if isinstance(obs, Collection):

269 assert all(isinstance(o, get_args(ObsInputType)) for o in obs)

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/matching.py:332, in _match_single_obs(obs, mod, obs_item, mod_item, gtype, max_model_gap, spatial_method, spatial_tolerance, obs_no_overlap)

329 else:

330 models = mod # type: ignore

--> 332 model_results = [_parse_single_model(m, item=mod_item, gtype=gtype) for m in models]

333 names = [m.name for m in model_results]

334 if len(names) != len(set(names)):

File /opt/hostedtoolcache/Python/3.13.11/x64/lib/python3.13/site-packages/modelskill/matching.py:468, in _parse_single_model(mod, item, gtype)

466 return model_result(mod, item=item, gtype=gtype)

467 except ValueError as e:

--> 468 raise ValueError(

469 f"Could not compare. Unknown model result type {type(mod)}. {str(e)}"

470 )

471 else:

472 if item is not None:

ValueError: Could not compare. Unknown model result type <class 'str'>. Input has more than 1 item, but item was not given! Available items: ['Europlatform: Sign. Wave Height', 'K14: Sign. Wave Height', 'F16: Sign. Wave Height', 'F3: Sign. Wave Height', 'Europlatform: Sign. Wave Height, W', 'K14: Sign. Wave Height, W', 'F16: Sign. Wave Height, W', 'F3: Sign. Wave Height, W', 'Europlatform: Sign. Wave Height, S', 'K14: Sign. Wave Height, S', 'F16: Sign. Wave Height, S', 'F3: Sign. Wave Height, S', 'Europlatform: Wind speed', 'K14: Wind speed', 'F16: Wind speed', 'F3: Wind speed', 'Europlatform: Wind direction', 'K14: Wind direction', 'F16: Wind direction', 'F3: Wind direction']
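The second exception only wraps the first one. The root cause is that the model file holds 20 items and none was selected, so the library cannot know which one to compare with the observation. The following is a minimal, simplified sketch of that selection rule in plain Python. The helper `select_item` is hypothetical and written only for illustration; it is not the library's implementation.

```python
from typing import Optional, Sequence, Union


def select_item(items: Sequence[str], item: Optional[Union[int, str]] = None) -> str:
    """Pick one item name from a list of item names (hypothetical helper)."""
    if item is None:
        if len(items) == 1:
            return items[0]  # a single-item file is unambiguous
        raise ValueError(
            "Input has more than 1 item, but item was not given! "
            f"Available items: {list(items)}"
        )
    if isinstance(item, int):
        return items[item]  # select by position
    if item not in items:
        raise KeyError(f"{item!r} not found in {list(items)}")
    return item  # select by name


# A few of the item names listed in the error message above
model_items = [
    "Europlatform: Sign. Wave Height",
    "K14: Sign. Wave Height",
    "F16: Sign. Wave Height",
    "F3: Sign. Wave Height",
]

selected = select_item(model_items, item="Europlatform: Sign. Wave Height")
print(selected)
```

In the exercise, the corresponding step is to tell `ms.match` which model item to use. The signature shown in the traceback lists `obs_item` and `mod_item` parameters for this purpose.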

When you have fixed the above snippet, you can continue to do the skill assessment

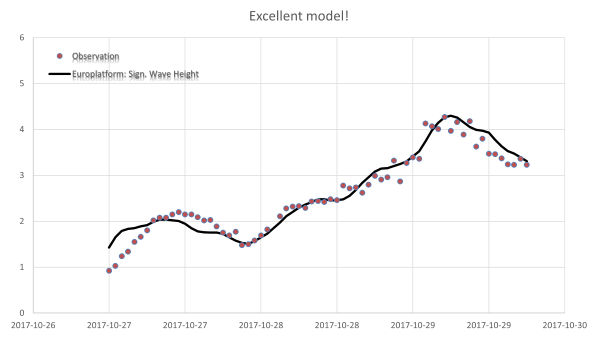

# plot a timeseries of the comparison

# * remove the default title

# * set the limits of the y axis to cover the 0-6m interval

Your colleague, who is very skilled at Excel, wants to make a plot like this one:

# Use the .df property on the comparer object to save the obs and model timeseries as an Excel file ("skill.xlsx")

# you might get an error "No module named 'openpyxl'", the solution is to run `pip install openpyxl`

# calculate the default skill metrics using the skill method

# calculate the skill using the mean absolute percentage error and max error, use the metrics argument

# c.skill(metrics=[__,__])
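To get a feeling for what these two metrics measure, here is a small plain-Python sketch using the common textbook definitions. The function names and the numbers are made up for illustration and are not taken from the exercise data. The library's own metric functions may use different conventions (for example, percent scaling), so check their docstrings.

```python
def mape_sketch(obs, mod):
    """Mean absolute percentage error, in percent (textbook definition)."""
    return 100.0 * sum(abs((o - m) / o) for o, m in zip(obs, mod)) / len(obs)


def max_error_sketch(obs, mod):
    """Largest absolute deviation between model and observation."""
    return max(abs(m - o) for o, m in zip(obs, mod))


# Illustrative values only (not from the dfs0 files)
obs = [1.0, 2.0, 4.0]
mod = [1.1, 1.8, 4.4]

print(f"MAPE: {mape_sketch(obs, mod):.1f} %")
print(f"Max error: {max_error_sketch(obs, mod):.2f} m")
```

Note that the percentage error divides by the observation, so it becomes unstable when observed values are close to zero.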

# import the hit_ratio metric from modelskill.metrics

# and calculate the ratio when the deviation between model and observation is less than 0.5 m

# hint: use the Observation and Model columns of the dataframe from the .df property you used above

# is the hit ratio ~0.95? Does it match your expectation based on the timeseries plot?

# what about a deviation of less than 0.1m? Pretty accurate wave model...

# hit_ratio(c.df.Observation, __, a=__)
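The idea behind a hit ratio can be sketched in a few lines of plain Python: count the share of time steps where the model is within an acceptable deviation `a` of the observation. The function `hit_ratio_sketch` and the numbers below are assumptions for illustration. The library function may treat the boundary case or missing values differently.

```python
def hit_ratio_sketch(obs, mod, a=0.1):
    """Fraction of points where |model - observation| is smaller than a."""
    hits = sum(1 for o, m in zip(obs, mod) if abs(m - o) < a)
    return hits / len(obs)


# Illustrative values only (not from the dfs0 files)
obs = [1.0, 2.0, 3.0, 4.0]
mod = [1.05, 2.6, 2.8, 4.4]

print(hit_ratio_sketch(obs, mod, a=0.5))  # three of four points within 0.5 m
print(hit_ratio_sketch(obs, mod, a=0.1))  # one of four points within 0.1 m
```

A tighter tolerance can only lower the ratio or leave it unchanged, which is what the two questions above ask you to verify on the real data.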

# compare the distribution of modelled and observed values, using the .hist method

# change the number of bins to 10