Dependencies and Continuous Integration

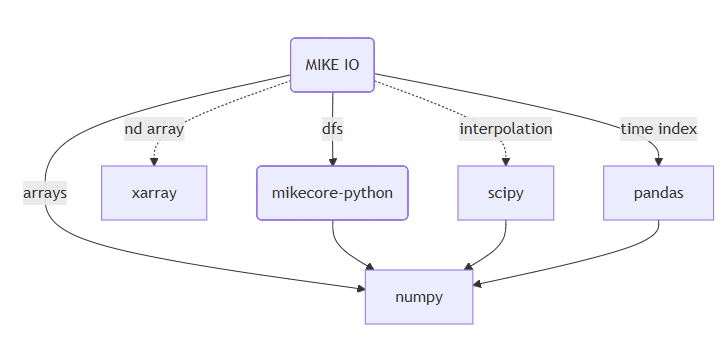

Dependencies

⏩ Dependencies are other pieces of software your project needs to work.

🤔 They save time by reusing existing solutions, but can make your project harder to maintain if they change or are no longer available.

Application

A program that is run by a user

- command line tool

- script

- web application

Pin versions to ensure reproducibility, e.g. numpy==1.11.0

Library

A program that is used by another program

- numpy

- scikit-learn

Make the requirements as loose as possible to avoid conflicts with other packages, e.g. numpy>=1.11.0

Dependency resolution

- Python projects often depend on many packages, which may depend on others (“dependency tree”).

- Different packages may require conflicting versions of the same dependency.

- Historically, Python tools did not always resolve these conflicts well, leading to “dependency hell.” 😈

- Ensuring everyone gets the same working set of packages has been difficult, especially for larger projects. 🤪


Modern tools like uv aim to solve these problems with faster and more reliable dependency resolution. 😎
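To make the conflict concrete, here is a minimal sketch of two packages constraining the same dependency. The candidate versions, the helper names (`parse`, `satisfies`, `compatible`) and the two packages `pkg_a` and `pkg_b` are hypothetical, and the version handling is deliberately simplified (plain dotted integers, not the full PEP 440 rules that real resolvers implement).

```python
import operator

# Comparison operators supported by this simplified sketch.
OPS = {
    "==": operator.eq,
    ">=": operator.ge,
    "<=": operator.le,
    "<": operator.lt,
    ">": operator.gt,
}


def parse(version: str) -> tuple[int, ...]:
    """Turn '1.11.0' into (1, 11, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def satisfies(version: str, spec: str) -> bool:
    """Check a version against a comma-separated spec such as '>=1.5.1,<=2.0.0'."""
    for clause in spec.split(","):
        clause = clause.strip()
        # Try two-character operators first so '<=' is not read as '<'.
        for symbol in sorted(OPS, key=len, reverse=True):
            if clause.startswith(symbol):
                if not OPS[symbol](parse(version), parse(clause[len(symbol):])):
                    return False
                break
        else:
            raise ValueError(f"unsupported clause: {clause!r}")
    return True


def compatible(candidates: list[str], specs: list[str]) -> list[str]:
    """Return the candidate versions accepted by every spec."""
    return [v for v in candidates if all(satisfies(v, s) for s in specs)]


# Hypothetical released versions of one shared dependency.
candidates = ["1.11.0", "1.26.4", "2.0.0", "2.1.0"]

# pkg_a and pkg_b (hypothetical) both depend on it, with different constraints.
loose = compatible(candidates, [">=1.11.0", ">=1.26.0"])
conflict = compatible(candidates, [">=2.0.0", "<2.0.0"])

print(loose)     # versions that both packages accept
print(conflict)  # empty list: no single version satisfies both
```

With loose lower bounds there are several versions to choose from; once one package demands `>=2.0.0` and another `<2.0.0`, nothing is left, which is the situation a resolver has to detect and report.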

uv

- 🚀 A single tool to replace pip, pip-tools, pipx, poetry, pyenv, twine, virtualenv, and more.

- ⚡️ 10-100x faster than pip.

- 🗂️ Provides comprehensive project management, with a universal lockfile.

- ❇️ Runs scripts, with support for inline dependency metadata.

- 🐍 Installs and manages Python versions.

- 🛠️ Runs and installs tools published as Python packages.

- 🔩 Includes a pip-compatible interface for a performance boost with a familiar CLI.

- 💾 Disk-space efficient, with a global cache for dependency deduplication.

- ⏬ Installable without Rust or Python via curl or pip.

- 🖥️ Supports macOS, Linux, and Windows.
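The "inline dependency metadata" bullet refers to a comment block at the top of a script (the format standardised in PEP 723) that declares what the script needs. A minimal sketch follows; the function `mean` and the empty dependency list are illustrative assumptions, chosen so the script runs with the standard library alone.

```python
# /// script
# requires-python = ">=3.10"
# dependencies = []
# ///

# The block above is inline script metadata: a tool such as uv can read it
# before running the file. Third-party requirements would be listed in
# `dependencies`, e.g. "numpy>=1.11.0".

import statistics


def mean(values: list[float]) -> float:
    """Average a list of numbers using only the standard library."""
    return statistics.mean(values)


if __name__ == "__main__":
    print(mean([1.0, 2.0, 3.0]))
```

Saved under a hypothetical name such as `example.py`, the script could be started with `uv run example.py`, letting uv prepare an environment that matches the metadata before executing it.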

Dependency management

uv is the recommended tool for managing a Python project, including its dependencies.

Example of pinning versions:

pyproject.toml

dependencies = [
    "numpy==1.11.0",
    "scipy==0.17.0",
    "matplotlib==1.5.1",
]

Or using a range of versions:

pyproject.toml

dependencies = [
    "numpy>=1.11.0",
    "scipy>=0.17.0",
    "matplotlib>=1.5.1,<=2.0.0",
]

Install dependencies:

$ uv sync

Development dependencies

Dependencies needed for testing, building documentation, linting, etc., but not needed to run the package.

pyproject.toml

[dependency-groups]
dev = [
    "pytest>=8.4.1",
]

Dependency management using uv

- Add a dependency:

$ uv add matplotlib

- Remove a dependency:

$ uv remove seaborn

- Add a development dependency:

$ uv add --dev pytest

Creating an installable package

Create a new library project:

$ uv init --lib

Start a Python session:

$ uv run python
>>> import mini
>>> mini.foo()
42
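The session above implies that `mini.foo()` returns 42. A test for it could look like the sketch below. The body of `foo` is an assumption (the source only shows its return value), and it is defined inline here as a stand-in for `from mini import foo` so that the snippet runs on its own.

```python
def foo() -> int:
    """Stand-in for mini.foo; in the real project use `from mini import foo`."""
    return 42


def test_foo() -> None:
    # pytest collects functions named test_* and reports a failure
    # if any assert inside them is false.
    assert foo() == 42
```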

Run tests:

$ uv run pytest
...
tests/test_foo.py . [100%]
=============== 1 passed in 0.01s ===============

Virtual environments

- Creates a clean environment for each project

- Allows different versions of a package to coexist on your machine

- Can be used to create a reproducible environment for a project

- Virtual environments are managed by uv
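One way to see the isolation at work is to ask the interpreter where it lives. The sketch below uses only the standard library; the function name `in_virtual_environment` is made up for this example.

```python
import sys


def in_virtual_environment() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # Inside a virtual environment sys.prefix points at the environment,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("prefix:     ", sys.prefix)
print("base prefix:", sys.base_prefix)
print("virtual env:", in_virtual_environment())
```

Started through `uv run python` inside a uv-managed project, this would be expected to report the project's own environment, since uv runs commands inside it.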

Continuous Integration (CI)

Running tests on every commit in a well-defined environment checks that the code works as expected.

It solves the “it works on my machine” problem.

Executing the code on a remote server, rather than on a developer's own machine, verifies that it does not depend on a local setup.

Examples of CI services:

- GitHub Actions

- Azure Pipelines

- Travis CI

GitHub Actions

- Workflows are stored in the .github/workflows folder.

- Each workflow is described in a YAML file.

- YAML is whitespace sensitive (like Python).

- YAML can contain lists, dictionaries and strings, and can be nested.
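For readers coming from Python, the YAML building blocks map onto familiar types: a mapping corresponds to a dict and a sequence to a list. The sketch below writes a small workflow fragment as a Python literal to show the nesting; it illustrates the data model by hand and is not the output of a YAML parser.

```python
# YAML being mirrored (shown here as comments):
#
#   name: Quick test
#   jobs:
#     build:
#       runs-on: ubuntu-latest
#       steps:
#         - name: Install dependencies
#           run: uv sync
#         - name: Test with pytest
#           run: uv run pytest

workflow = {
    "name": "Quick test",
    "jobs": {
        "build": {
            "runs-on": "ubuntu-latest",
            "steps": [
                {"name": "Install dependencies", "run": "uv sync"},
                {"name": "Test with pytest", "run": "uv run pytest"},
            ],
        }
    },
}

# Nested access follows the indentation levels of the YAML file.
for step in workflow["jobs"]["build"]["steps"]:
    print(f'{step["name"]}: {step["run"]}')
```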

$ tree mikeio/.github/
mikeio/.github/
└── workflows
    ├── docs.yml
    ├── full_test.yml
    ├── notebooks_test.yml
    ├── perf_test.yml
    └── python-publish.yml

Workflow example

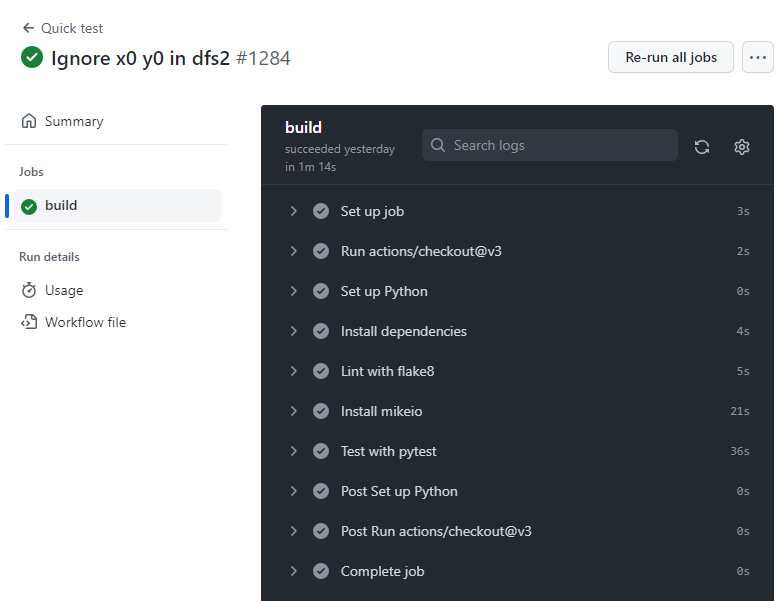

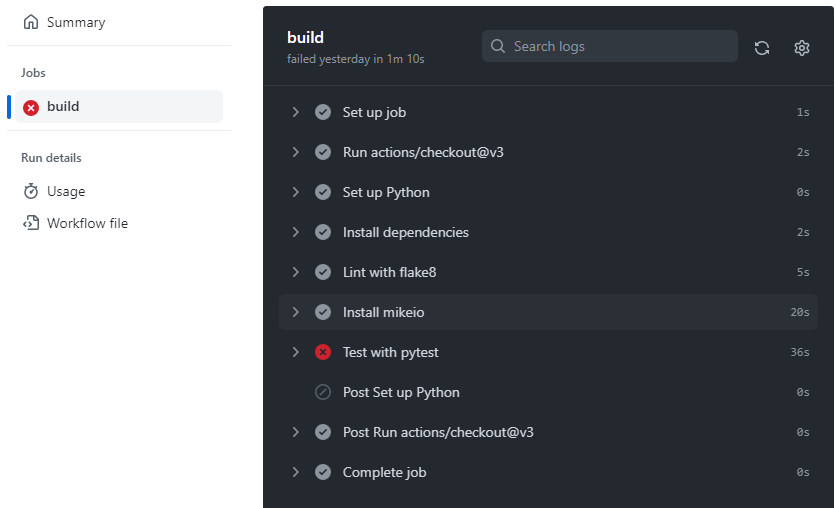

name: Quick test
on: # when to run the workflow
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]
jobs: # which jobs to run
  build: # descriptive name 🙄
    runs-on: ubuntu-latest # on what operating system
    steps:
      - uses: actions/checkout@v3
      - name: Set up uv
        uses: astral-sh/setup-uv@v6
        with:
          python-version: "3.13"
      - name: Install dependencies
        run: |
          uv sync
      - name: Test with pytest
        run: |
          uv run pytest

Benefits of CI

- Run tests on every commit

- Test on different operating systems

- Test on different Python versions

- Create API documentation

- Publish package to PyPI or similar package repository

Triggers

- push and pull_request are the most common triggers

- schedule can be used to run the workflow on a schedule

- workflow_dispatch can be used to trigger the workflow manually

Jobs

- Operating system

- Python version

- …

...

jobs:

build:

runs-on: ${{ matrix.os }}

strategy:

matrix:

os: [ubuntu-latest, windows-latest]

python-version: ["3.10","3.13"]

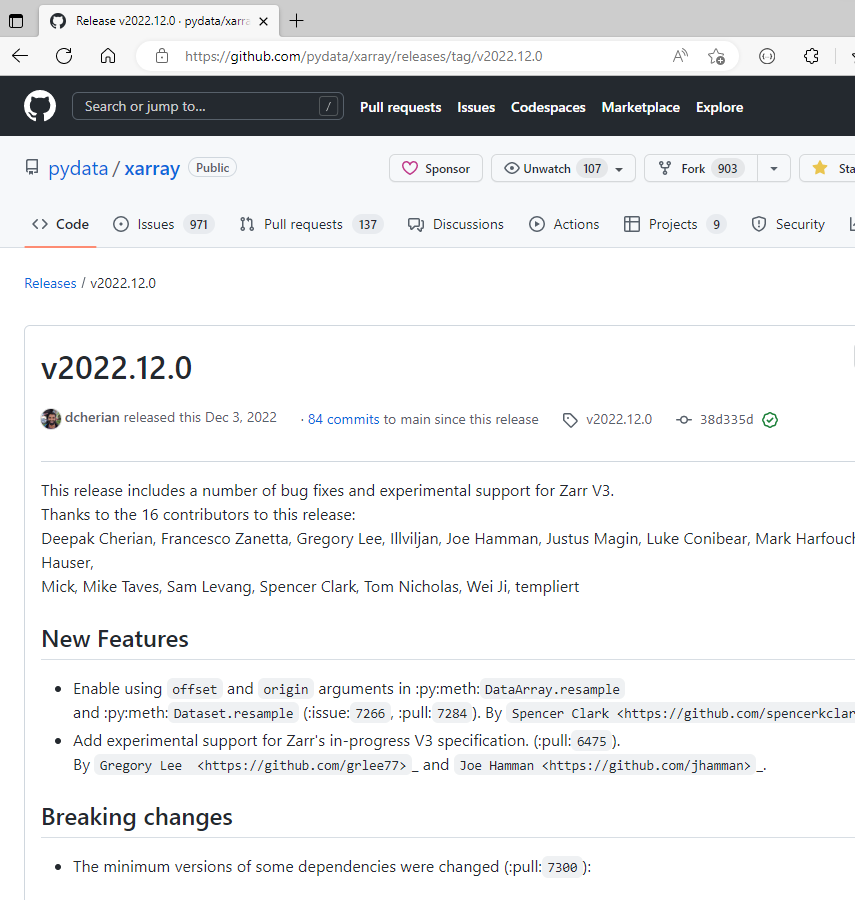
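A matrix expands to one job per combination of its values. As a rough illustration of that expansion (not a description of how GitHub implements it), the combinations for the values above can be enumerated in Python:

```python
from itertools import product

# Values copied from the strategy.matrix section of the workflow.
matrix = {
    "os": ["ubuntu-latest", "windows-latest"],
    "python-version": ["3.10", "3.13"],
}

# One job is created for every combination of the matrix values.
jobs = [dict(zip(matrix, combo)) for combo in product(*matrix.values())]

for job in jobs:
    print(job)

print(len(jobs), "jobs in total")
```

Two operating systems times two Python versions gives four jobs. The quotes around "3.10" in the YAML matter: unquoted, YAML would read it as the number 3.1.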

GitHub Releases

GitHub releases are a way to publish software releases.

You can upload files, write release notes and tag the release.

As a minimum, the release will contain the source code at the time of the release.

Creating a release can trigger other workflows, e.g. publishing a package to PyPI.

Summary

- Application vs library

- Use a separate virtual environment for each project

- Use GitHub Actions to run tests on every commit

- Use GitHub Releases to publish software releases